Models and Datasets of Document AI

1. Optical Character Recognition

Optical Character Recognition (OCR) converts images of typed, handwritten, or printed text into machine-encoded text. It is a widely studied problem with many well-established open-source and commercial offerings.

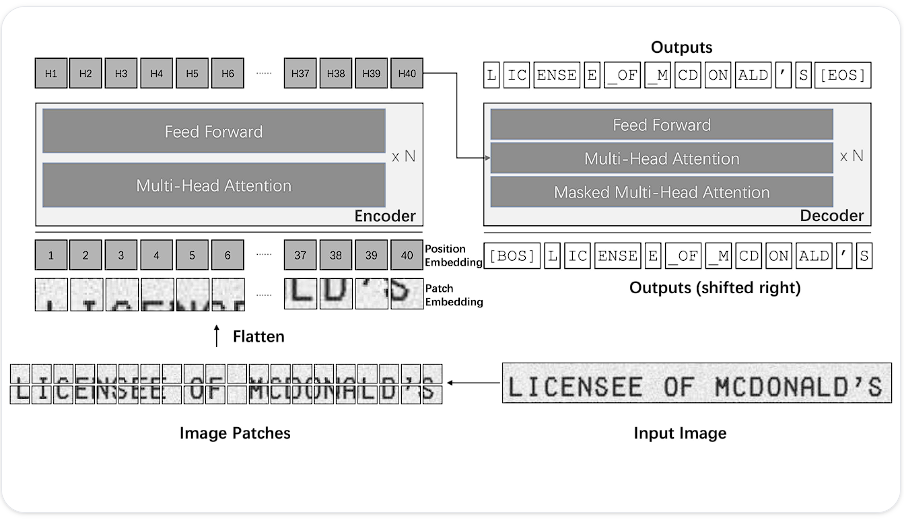

TrOCR: Transformer-based Optical Character Recognition with Pre-trained Models

The article “TrOCR: Transformer-based Optical Character Recognition with Pre-trained Models” introduces a novel OCR model called TrOCR. This model leverages pre-trained Transformer architectures for both image understanding and text generation, eliminating the need for CNNs and RNNs typically used in OCR systems. TrOCR uses pre-trained image Transformers (e.g., ViT, DeiT, BEiT) as encoders and pre-trained text Transformers (e.g., BERT, RoBERTa, MiniLM) as decoders, simplifying the model structure and enhancing performance. The model is trained in two stages: pre-training on large-scale synthetic data and fine-tuning on human-labeled datasets. TrOCR achieves state-of-the-art results on various OCR benchmarks, including printed, handwritten, and scene text recognition tasks. The models and code are publicly available for further research and development.
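To make the encoder input concrete, the following is a minimal sketch of how a ViT-style image encoder splits an image into fixed-size, non-overlapping patches before embedding them. The helper name `split_into_patches` is hypothetical, and the 384×384 input resolution with 16×16 patches is an assumed, illustrative setting rather than something stated above; this is not the reference implementation.

```python
from typing import List

Image = List[List[int]]  # grayscale image as rows of pixel values


def split_into_patches(image: Image, patch_size: int) -> List[List[int]]:
    """Split an H x W image into flattened, non-overlapping patches (row-major)."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [
                image[row][col]
                for row in range(top, top + patch_size)
                for col in range(left, left + patch_size)
            ]
            patches.append(patch)
    return patches


# Toy example: a 4 x 4 image split into 2 x 2 patches gives 4 patches of 4 pixels.
toy_image = [[row * 4 + col for col in range(4)] for row in range(4)]
toy_patches = split_into_patches(toy_image, patch_size=2)
print(len(toy_patches), toy_patches[0])  # 4 [0, 1, 4, 5]

# Under the assumed 384 x 384 input and 16 x 16 patches, the encoder sees this many patches.
num_patches = (384 // 16) ** 2
print(num_patches)  # 576
```

Each flattened patch would then be linearly projected and combined with a position embedding before entering the Transformer encoder; that step is omitted here.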

Most existing OCR models rely on CNNs for feature extraction and RNNs for sequence modeling, and often need an additional language model to improve accuracy. TrOCR removes both components: pre-trained Transformers handle image understanding and text generation alike. This simplifies the architecture and lets the model benefit from large-scale pre-training on both the vision and the language side.

The TrOCR article provides valuable insights that can be directly applied to the field of PDF conversion, especially in the context of extracting and recognizing text from PDF documents.

End-to-End Text Recognition

TrOCR performs recognition end to end with a single Transformer encoder-decoder, which can streamline the recognition stage of a PDF conversion pipeline. Note that TrOCR operates on cropped text-line images, so a separate text detection or layout analysis step is still needed to locate the lines on a page. Within that constraint, replacing a CNN feature extractor, an RNN sequence model, and an external language model with one model can make the pipeline simpler and less prone to errors.

Elimination of CNN and RNN Dependencies

TrOCR eliminates the need for CNNs and RNNs, which are commonly used in OCR tasks. This can reduce the complexity of your PDF conversion system, making it easier to implement and maintain.

Pre-training and Fine-tuning Strategy

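The two-stage training process of TrOCR, pre-training on large-scale synthetic data followed by fine-tuning on human-labeled datasets, can be adapted to PDF conversion. For example, a model could be pre-trained on synthetically rendered pages and then fine-tuned on a smaller set of manually verified conversions.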

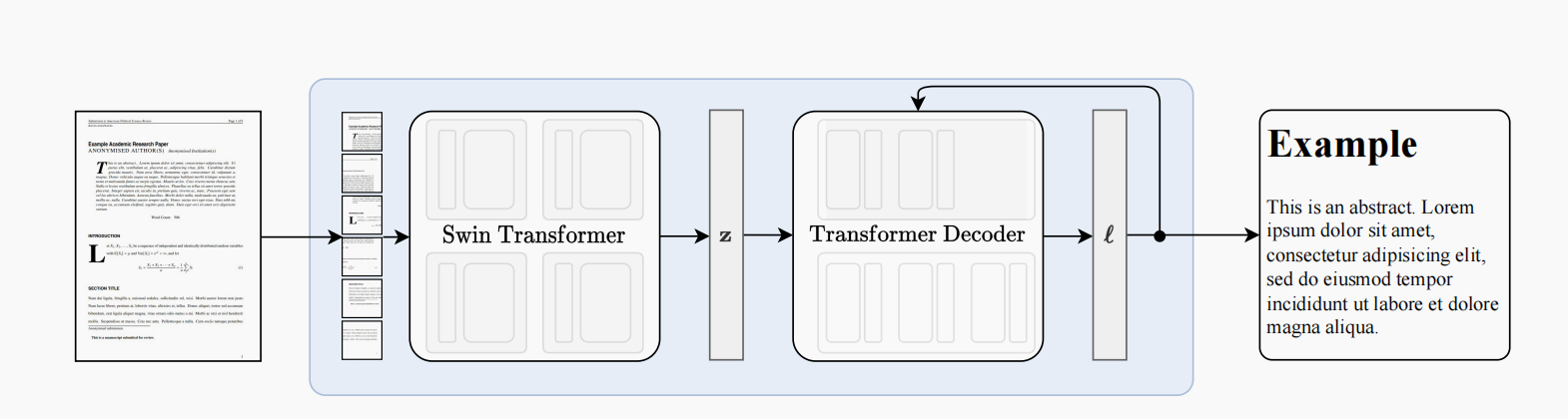

Nougat: Neural Optical Understanding for Academic Documents

Nougat (Neural Optical Understanding for Academic Documents) is a Visual Transformer model designed to perform Optical Character Recognition (OCR) on scientific documents, converting them into a markup language. This approach addresses the challenge of semantic information loss in PDFs, particularly for mathematical expressions. The model uses a Swin Transformer encoder to process document images into latent embeddings, which are then converted to a sequence of tokens in an autoregressive manner. The primary contributions of the paper include:

- The release of a pre-trained model capable of converting PDFs to a lightweight markup language.

- An approach that only depends on the image of a page, making it applicable to scanned papers and books.
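The autoregressive generation step described above can be sketched as a greedy decoding loop. In Nougat the next token comes from a Transformer decoder conditioned on the encoder embeddings; here `toy_next_token` is a hypothetical stand-in that replays a fixed markup string so the loop runs without a trained model. All names below are illustrative assumptions.

```python
from typing import Callable, List, Sequence

BOS, EOS = "<s>", "</s>"


def greedy_decode(
    next_token: Callable[[Sequence[float], List[str]], str],
    page_embedding: Sequence[float],
    max_length: int = 16,
) -> List[str]:
    """Generate tokens one at a time, feeding each output back as input."""
    tokens = [BOS]
    while len(tokens) < max_length:
        token = next_token(page_embedding, tokens)
        if token == EOS:
            break
        tokens.append(token)
    return tokens[1:]  # drop the start marker


# Hypothetical stand-in for a trained decoder: it replays a fixed markup string.
TARGET = ["# ", "Title", "\n", "$", "x^2", "$"]


def toy_next_token(page_embedding: Sequence[float], prefix: List[str]) -> str:
    position = len(prefix) - 1  # number of tokens generated so far
    return TARGET[position] if position < len(TARGET) else EOS


markup = "".join(greedy_decode(toy_next_token, page_embedding=[0.0, 0.0]))
print(repr(markup))  # '# Title\n$x^2$'
```

The loop stops either when the decoder emits the end marker or when `max_length` is reached, which is the usual safeguard against runaway generation.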

The aims of Nougat—to improve the accessibility and searchability of scientific documents by converting them into machine-readable text—are well-supported by the methodology and results. The choice of a transformer-based model, known for its effectiveness in sequence-to-sequence tasks, is apt for OCR tasks involving complex structures like mathematical expressions. The results demonstrate that the model can effectively convert PDFs into a useful markup language.

Compared to existing OCR tools like Tesseract OCR, which processes text line-by-line, Nougat’s transformer-based approach offers a more holistic understanding of the document’s structure. It avoids the common pitfalls of traditional OCR methods, such as misinterpreting superscripts and subscripts, making it more suitable for scientific documents. The use of an end-to-end architecture also sets it apart from models that rely on third-party OCR engines, providing a more integrated solution.

2. Multi-Functional Document AI

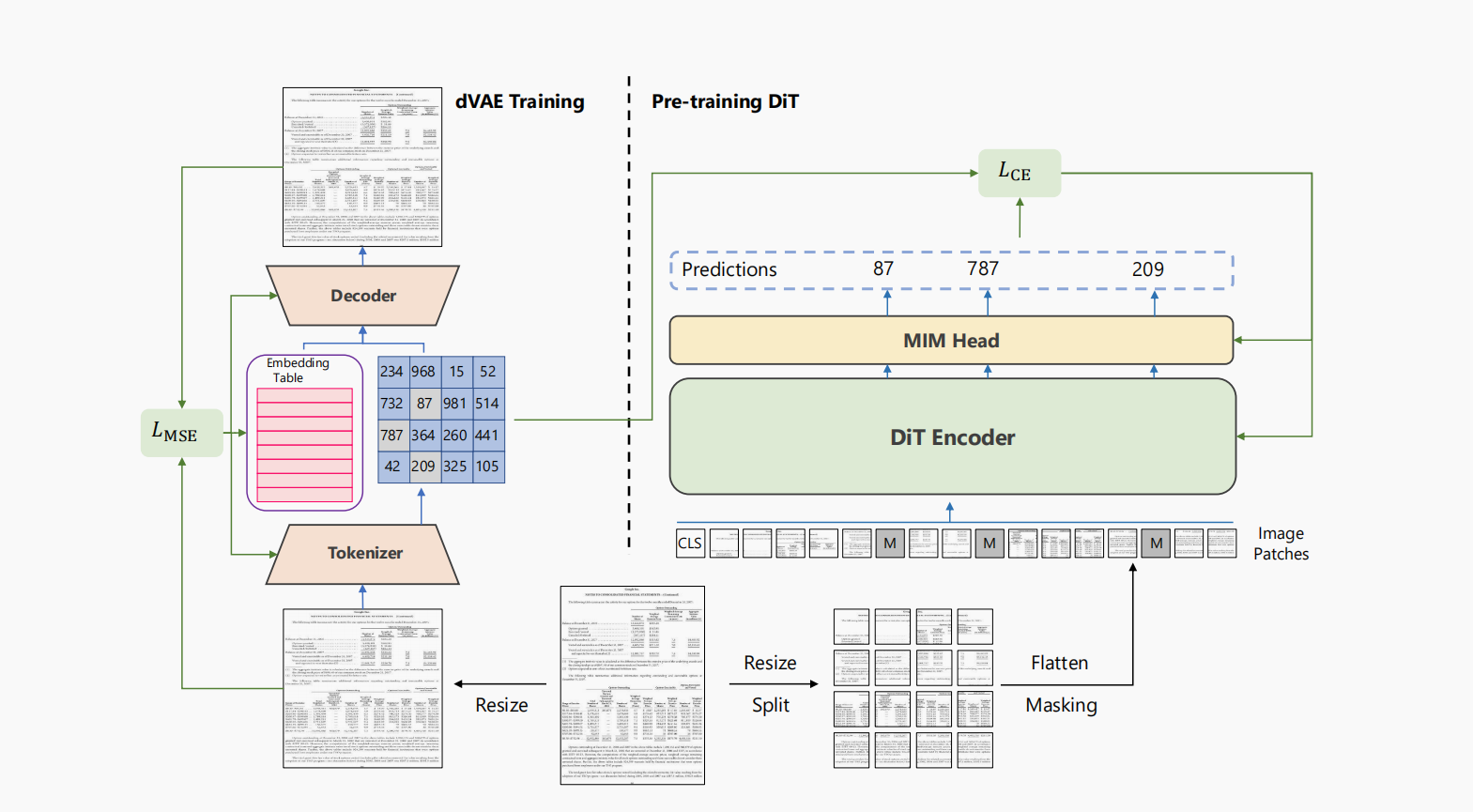

DiT: Self-supervised Pre-training for Document Image Transformer

Function: document image classification, layout analysis, table detection, and OCR text detection.

DiT (Document Image Transformer) is a self-supervised pre-trained model designed for Document AI tasks. Leveraging the Transformer architecture, DiT focuses on document image understanding by pre-training on a large-scale dataset of 42 million unlabeled document images. The model employs Masked Image Modeling (MIM) as its pre-training objective, similar to the BEiT model, to predict visual tokens from corrupted input images. This approach allows DiT to learn the global patch relationships within document images without relying on human-labeled data. The pre-trained DiT model has been fine-tuned and tested on several Document AI benchmarks, achieving state-of-the-art results in tasks such as document image classification, layout analysis, table detection, and OCR text detection.
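The MIM objective can be illustrated with a small sketch: a subset of patch positions is hidden, and the loss is computed only on those positions against their visual token ids. In a real setup the ids come from an image tokenizer and the probabilities from the Transformer; here the ids are random integers and the predictor is uniform. The 40% mask ratio, the uniform random masking, and all function names are assumptions made for illustration.

```python
import math
import random
from typing import Dict, List, Set

MASK_ID = -1  # placeholder shown to the model instead of the real patch token


def mask_patches(num_patches: int, mask_ratio: float, rng: random.Random) -> Set[int]:
    """Pick the patch positions that will be hidden from the model."""
    num_masked = int(num_patches * mask_ratio)
    return set(rng.sample(range(num_patches), num_masked))


def corrupt(visual_tokens: List[int], masked: Set[int]) -> List[int]:
    """Replace the tokens at masked positions with MASK_ID."""
    return [MASK_ID if i in masked else tok for i, tok in enumerate(visual_tokens)]


def mim_loss(
    predicted_probs: Dict[int, List[float]],
    visual_tokens: List[int],
    masked: Set[int],
) -> float:
    """Average cross-entropy over the masked positions only."""
    losses = [-math.log(predicted_probs[i][visual_tokens[i]]) for i in sorted(masked)]
    return sum(losses) / len(losses)


rng = random.Random(0)
visual_tokens = [rng.randrange(8) for _ in range(10)]  # toy ids, vocabulary of 8
masked = mask_patches(len(visual_tokens), mask_ratio=0.4, rng=rng)
corrupted = corrupt(visual_tokens, masked)

# A uniform predictor over 8 tokens scores ln(8); a trained model should do better.
uniform = {i: [1 / 8] * 8 for i in masked}
print(corrupted, round(mim_loss(uniform, visual_tokens, masked), 3))
```

Because unmasked positions never enter the loss, the model is pushed to infer hidden content from the surrounding patches, which is how it learns relationships across the page without labels.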

By adopting a self-supervised learning approach, the authors effectively address the scarcity of large-scale human-labeled document image datasets. Training on 42 million unlabeled document images using MIM allows the model to capture intricate details and relationships in the document layout, making it versatile for various Document AI tasks. The choice of using a Transformer architecture aligns well with recent advancements in natural image processing and leverages the strengths of multi-head attention mechanisms to process image patches effectively.

Compared to other Document AI approaches, DiT stands out for its self-supervised learning methodology and its application of the Transformer architecture to document images. Traditional models often rely on supervised learning with manually annotated datasets, which can be limiting in terms of scalability and adaptability to different document types. DiT’s approach of pre-training on a massive unlabeled dataset sets it apart by addressing these limitations. Additionally, the state-of-the-art results achieved by DiT across multiple benchmarks underscore its superiority over conventional models that may not leverage such extensive pre-training techniques.

One potential area for further exploration could be the adaptation of DiT to multilingual document processing, given the diverse range of languages and scripts in real-world documents. This could enhance its applicability and robustness in global contexts.

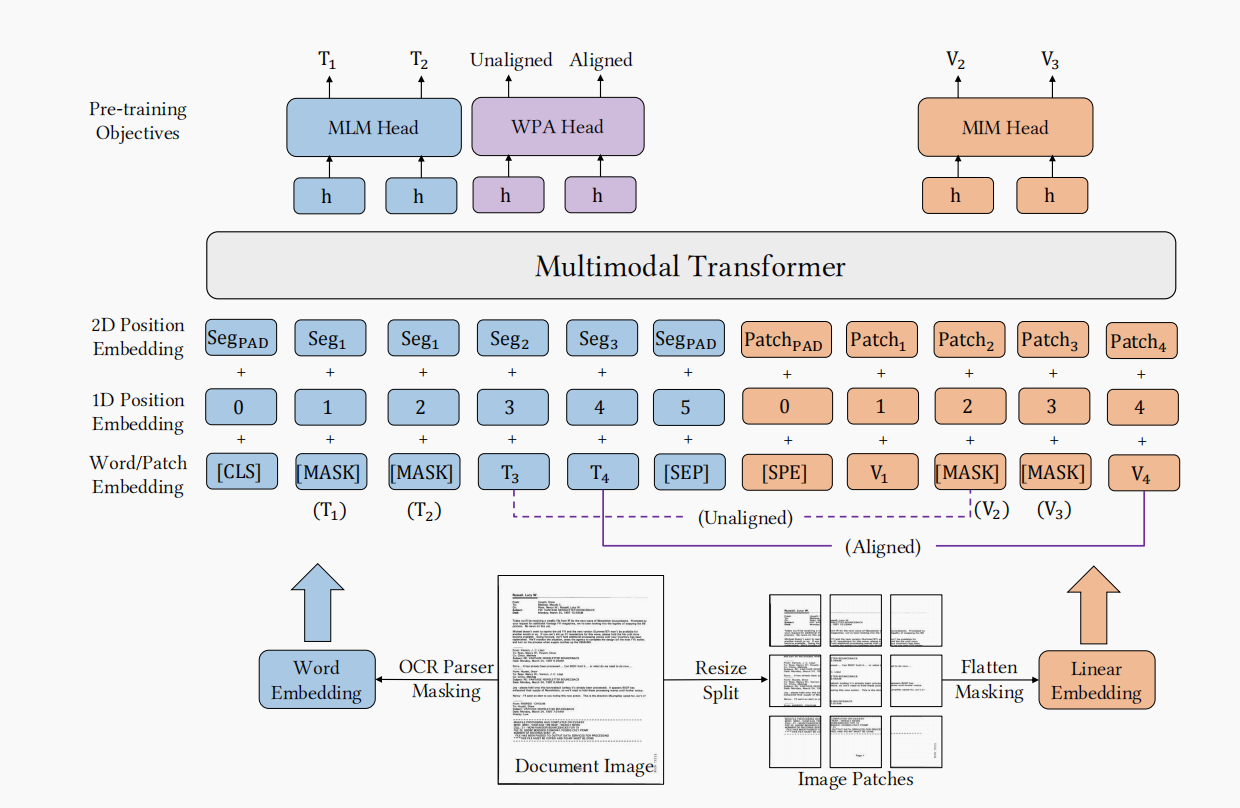

LayoutLMv3: Pre-training for Document AI with Unified Text and Image Masking

Function: form understanding, receipt understanding, document visual question answering, document image classification, and document layout analysis.

LayoutLMv3 is a pre-trained multimodal model designed for Document AI, which integrates text and image masking to improve multimodal representation learning. Unlike previous multimodal pre-trained models that have different pre-training objectives for text and image modalities, LayoutLMv3 uses a unified approach, simplifying the architecture and enhancing performance. Key features and contributions include:

- Unified Architecture and Pre-training Objectives: LayoutLMv3 employs unified text and image masking objectives, including Masked Language Modeling (MLM), Masked Image Modeling (MIM), and Word-Patch Alignment (WPA), to learn multimodal representations effectively.

- Experimental Validation: The model achieves state-of-the-art performance on both text-centric tasks (form understanding, receipt understanding, document visual question answering) and image-centric tasks (document image classification, document layout analysis).

- Parameter Efficiency: The model does not rely on pre-trained CNN or Faster R-CNN backbones for visual features, significantly reducing the number of parameters and eliminating the need for region annotations.
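As a rough illustration of the Word-Patch Alignment objective listed above, the sketch below derives the binary target for each word from the two masks. It assumes that a word counts as aligned only when none of the image patches it covers is masked, and that masked words are excluded from the loss; the function name `wpa_labels` and the data layout are hypothetical.

```python
from typing import Dict, Optional, Set


def wpa_labels(
    word_to_patches: Dict[int, Set[int]],
    masked_words: Set[int],
    masked_patches: Set[int],
) -> Dict[int, Optional[str]]:
    """Return 'aligned', 'unaligned', or None (excluded from the loss) per word."""
    labels: Dict[int, Optional[str]] = {}
    for word, patches in word_to_patches.items():
        if word in masked_words:
            labels[word] = None  # masked words are left out of the WPA loss
        elif patches & masked_patches:
            labels[word] = "unaligned"  # at least one covering patch is masked
        else:
            labels[word] = "aligned"
    return labels


# Three words; word 1 is masked in the text, patch 4 is masked in the image.
word_to_patches = {0: {0, 1}, 1: {2}, 2: {3, 4}}
labels = wpa_labels(word_to_patches, masked_words={1}, masked_patches={4})
print(labels)  # {0: 'aligned', 1: None, 2: 'unaligned'}
```

A classifier head trained on these targets has to relate each word to the image region it occupies, which is the cross-modal signal that MLM and MIM alone do not provide.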

The methodology used in LayoutLMv3 is highly appropriate for the goals of the study. By unifying text and image masking objectives, the authors address the common issue in multimodal representation learning where different objectives for text and image modalities can hinder the learning process. This unified approach is not only innovative but also practical, as it simplifies the training process and improves cross-modal alignment.

The aims of the study are to improve multimodal representation learning for Document AI tasks by using a unified text and image masking approach. The methodology directly supports this aim by employing MLM, MIM, and WPA objectives within a single architecture. The results validate the effectiveness of this approach, as LayoutLMv3 achieves state-of-the-art performance across various benchmarks, demonstrating that the unified methodology successfully meets the study’s aims.

Compared to other models in the same domain, such as DocFormer and SelfDoc, LayoutLMv3 stands out due to its unified pre-training objectives and its avoidance of reliance on CNN-based backbones. This makes LayoutLMv3 more efficient in terms of parameter usage and training complexity. Additionally, its performance on both text-centric and image-centric tasks surpasses that of other models, highlighting its robustness and versatility.

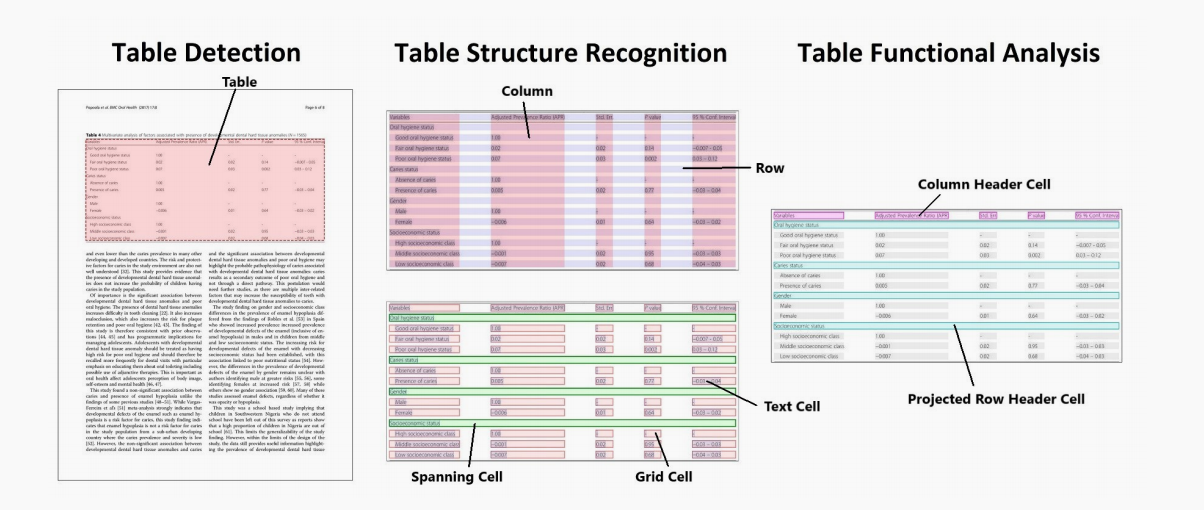

Table Transformer (TATR)

TATR is a deep learning object detection model tailored to extracting tables from image inputs. It was introduced as part of the PubTables-1M project and applies object detection principles to achieve robust table recognition.

TATR’s methodology, based on object detection using Transformer architecture, aligns well with current trends in computer vision, particularly in handling complex document structures like tables. The use of Transformer models allows for holistic understanding of table relationships within documents, enhancing accuracy and adaptability across different domains.

The paper effectively integrates its aim of comprehensive table extraction with a Transformer-based object detection approach. By focusing on direct set prediction and leveraging global context via bipartite matching, TATR achieves notable advancements in table recognition accuracy without the traditional anchor-based mechanisms.
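The bipartite matching step can be sketched as follows: each ground-truth box is assigned to exactly one prediction so that the total matching cost is minimal, and leftover predictions are treated as "no object". This sketch simplifies the cost to `1 - IoU` and finds the optimum by brute force over permutations, which is only practical for a handful of boxes; DETR-style detectors use the Hungarian algorithm with a richer cost. Function names are illustrative.

```python
from itertools import permutations
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match(predictions: List[Box], targets: List[Box]) -> List[Tuple[int, int]]:
    """Return (prediction_index, target_index) pairs minimising total (1 - IoU)."""
    if len(predictions) < len(targets):
        raise ValueError("expected at least as many predictions as targets")
    best_cost, best_assignment = float("inf"), ()
    for assignment in permutations(range(len(predictions)), len(targets)):
        cost = sum(1.0 - iou(predictions[p], targets[t]) for t, p in enumerate(assignment))
        if cost < best_cost:
            best_cost, best_assignment = cost, assignment
    return sorted((p, t) for t, p in enumerate(best_assignment))


targets = [(0, 0, 10, 10), (20, 20, 40, 30)]
predictions = [(21, 19, 41, 31), (50, 50, 60, 60), (1, 1, 10, 10)]
print(match(predictions, targets))  # [(0, 1), (2, 0)]
```

Prediction 1 overlaps no target and stays unmatched. Because every target receives exactly one prediction, duplicate detections are penalised during training, which is why this family of detectors needs neither anchors nor non-maximum suppression.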

Compared to other table extraction methods, the main advantage of TATR is a simpler detection pipeline built on a Transformer-based detector. It performs competitively with well-established detectors such as Faster R-CNN while avoiding anchor design and related post-processing.

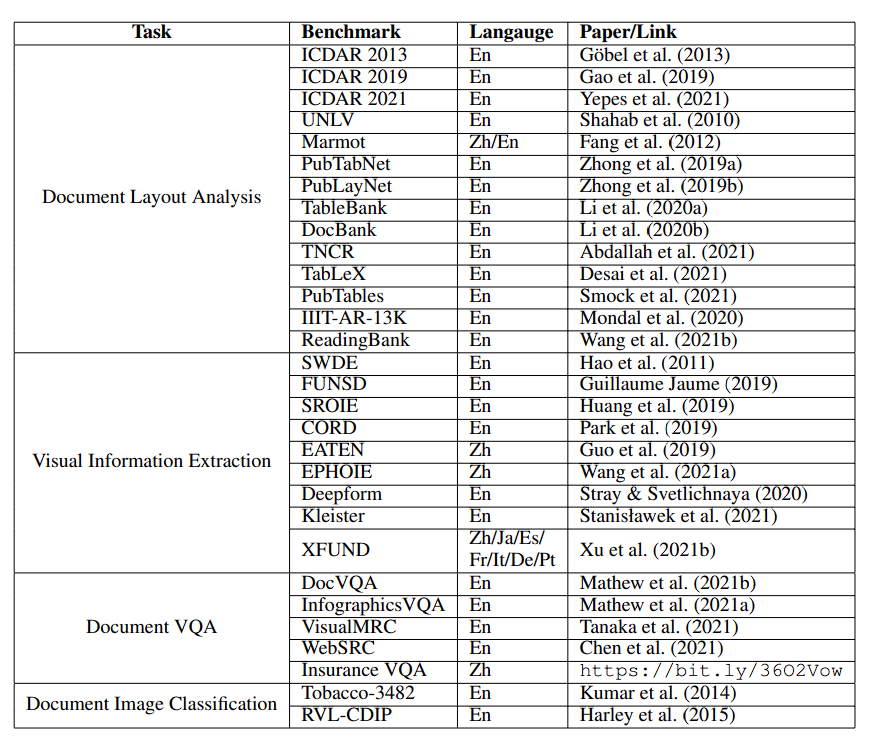

3. Datasets

https://github.com/HCIILAB/M6Doc

https://github.com/DS4SD/DocLayNet

https://github.com/doc-analysis/TableBank

https://github.com/buptlihang/CDLA

https://github.com/ibm-aur-nlp/PubLayNet

https://github.com/360AILAB-NLP/360LayoutAnalysis

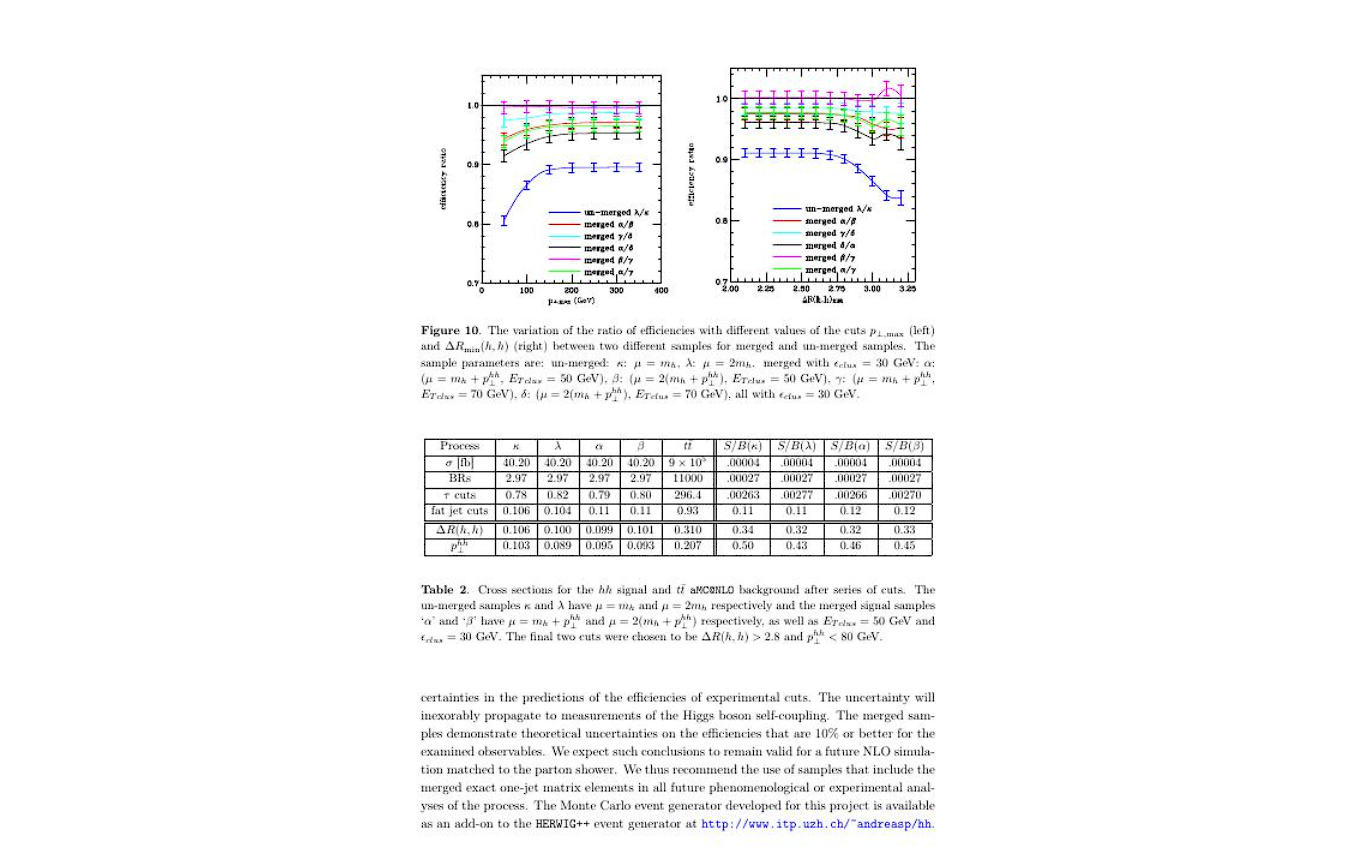

PubTables-1M: Towards comprehensive table extraction from unstructured documents

PubTables-1M is a comprehensive dataset developed for table structure recognition (TSR) and table detection (TD) tasks in document analysis. It comprises nearly a million tables sourced from the PMC Open Access (PMCOA) corpus, each annotated with spatial and semantic information derived from PDF and XML representations of scientific articles. The dataset addresses challenges such as aligning text from PDFs with structured XML data, normalizing table structures to correct over-segmentation, and applying quality control measures to ensure annotation accuracy.

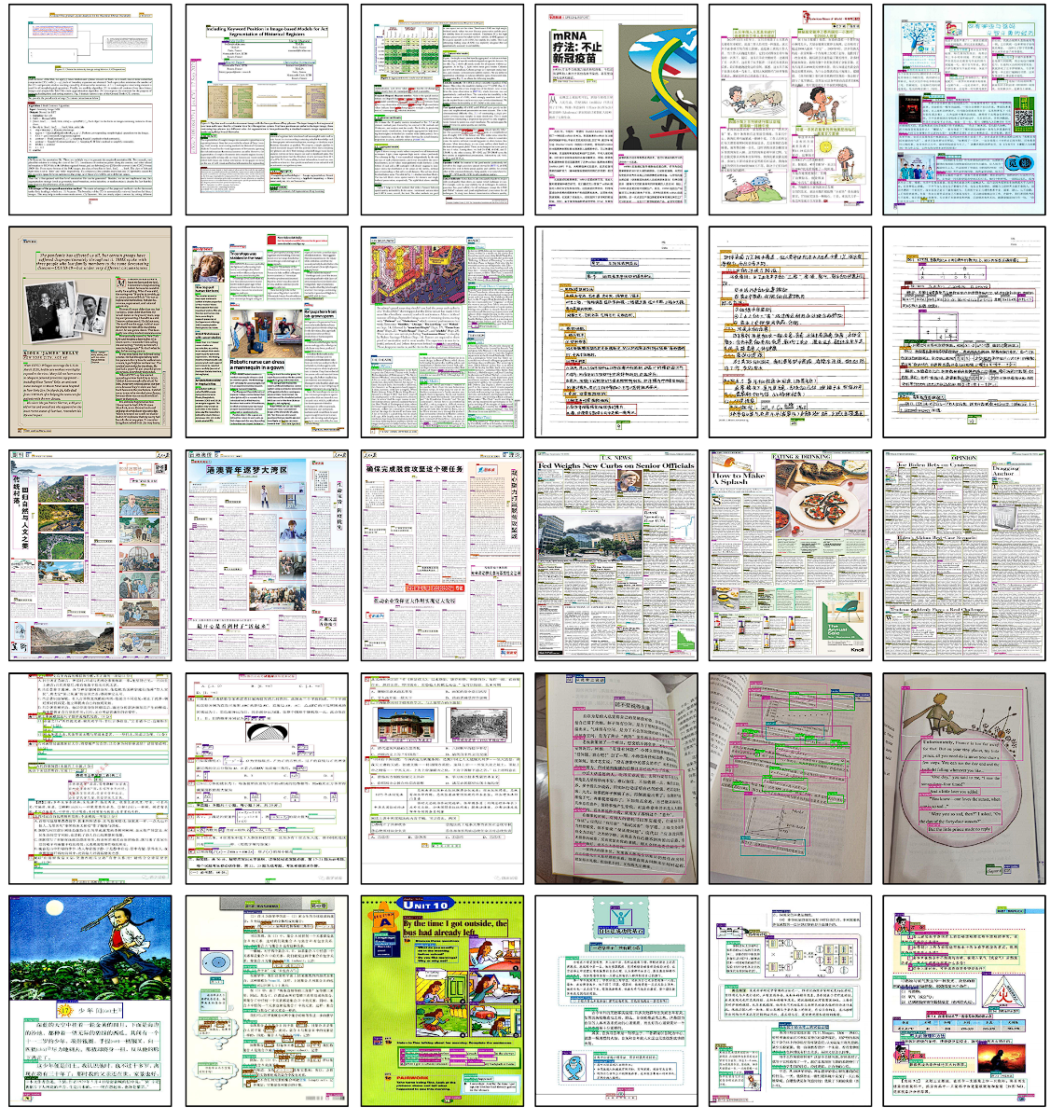

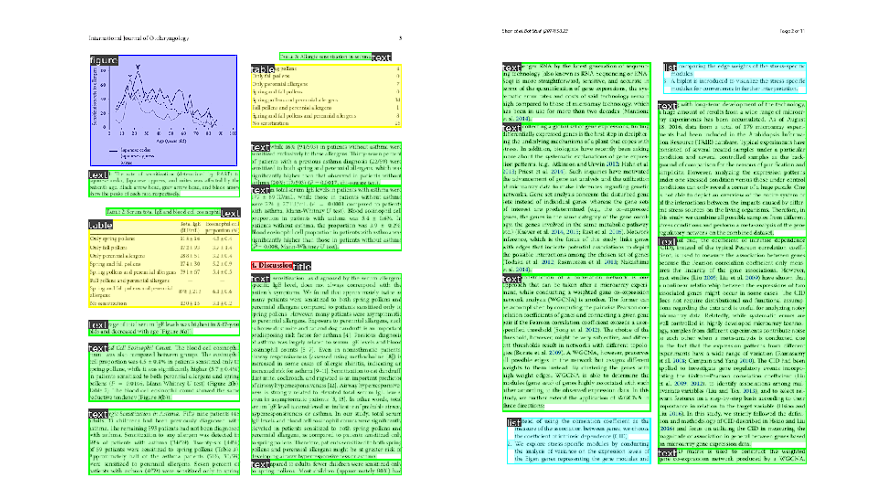

M6Doc Dataset

The M6Doc dataset is designed for research on layout analysis of modern documents. It contains a total of 9,080 modern document images, which are categorized into seven subsets according to their content and layouts: scientific article (11%), textbook (23%), test paper (22%), magazine (22%), newspaper (11%), note (5.5%), and book (5.5%). The images come in three formats: PDF (64%), photographed documents (5%), and scanned documents (31%). The dataset includes a total of 237,116 annotated instances.
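The proportions above translate into approximate subset sizes and annotation density as follows. This is a quick arithmetic sketch: the published percentages are rounded, so the per-subset counts are estimates and not official figures.

```python
TOTAL_IMAGES = 9080
TOTAL_INSTANCES = 237116

# Subset shares as listed in the dataset description.
SUBSET_SHARES = {
    "scientific article": 0.11,
    "textbook": 0.23,
    "test paper": 0.22,
    "magazine": 0.22,
    "newspaper": 0.11,
    "note": 0.055,
    "book": 0.055,
}

# Estimated number of images per subset, rounded to the nearest image.
approx_counts = {name: round(TOTAL_IMAGES * share) for name, share in SUBSET_SHARES.items()}

# Average number of annotated layout instances per image.
instances_per_image = TOTAL_INSTANCES / TOTAL_IMAGES

for name, count in approx_counts.items():
    print(f"{name:20s} ~{count}")
print(f"instances per image: {instances_per_image:.1f}")  # 26.1
```

At roughly 26 annotated instances per page, the layouts are dense, which is worth keeping in mind when choosing input resolution for a detector.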

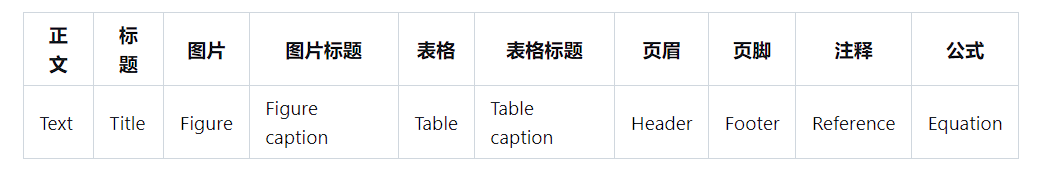

DocLayNet

DocLayNet is a human-annotated document layout segmentation dataset containing 80,863 unique pages from 6 document categories. It provides page-by-page layout segmentation ground truth using bounding boxes for 11 distinct class labels. It offers several unique features compared to related work such as PubLayNet or DocBank:

- Human annotation: DocLayNet is hand-annotated by well-trained experts, providing a gold standard in layout segmentation through human recognition and interpretation of each page layout.

- Large layout variability: DocLayNet includes diverse and complex layouts from a large variety of public sources in finance, science, patents, tenders, law texts, and manuals.

- Detailed label set: DocLayNet defines 11 class labels to distinguish layout features in high detail.

- Redundant annotations: A fraction of the pages in DocLayNet are double- or triple-annotated, which makes it possible to estimate annotation uncertainty and an upper bound on the prediction accuracy achievable with ML models.

- Predefined train, test, and validation sets: DocLayNet provides fixed splits to ensure proportional representation of the class labels and to avoid leakage of unique layout styles across the sets.

TableBank

TableBank is an image-based table detection and recognition dataset built with weak supervision from Word and LaTeX documents on the internet. It consists of 417,234 high-quality labeled tables, together with their original documents, across a variety of domains.

CDLA

CDLA is a Chinese Document Layout Analysis dataset, targeted at scenarios involving Chinese literature (papers). It defines 10 layout labels.

PubLayNet

PubLayNet is a large dataset of document images whose layout is annotated with both bounding boxes and polygonal segmentations. The documents come from the PubMed Central Open Access Subset (commercial use collection). The annotations are generated automatically by matching the PDF and XML versions of the articles in that subset.

360LayoutAnalysis

360LayoutAnalysis refines research paper data with manually annotated, fine-grained labels and builds a fine-grained layout analysis dataset for research report scenarios.

Main features:

- Covers three vertical fields: Chinese research papers, English research papers, and Chinese research reports, along with a general scenario model.

- Lightweight and fast inference (trained with YOLOv8; a single model is 6.23 MB).

- Chinese research paper scenario includes paragraph information.

- Chinese research report scenario/general scenario.